The Coffee Report

This example shows following aspects:

- Configuring a processing network with data aggregation

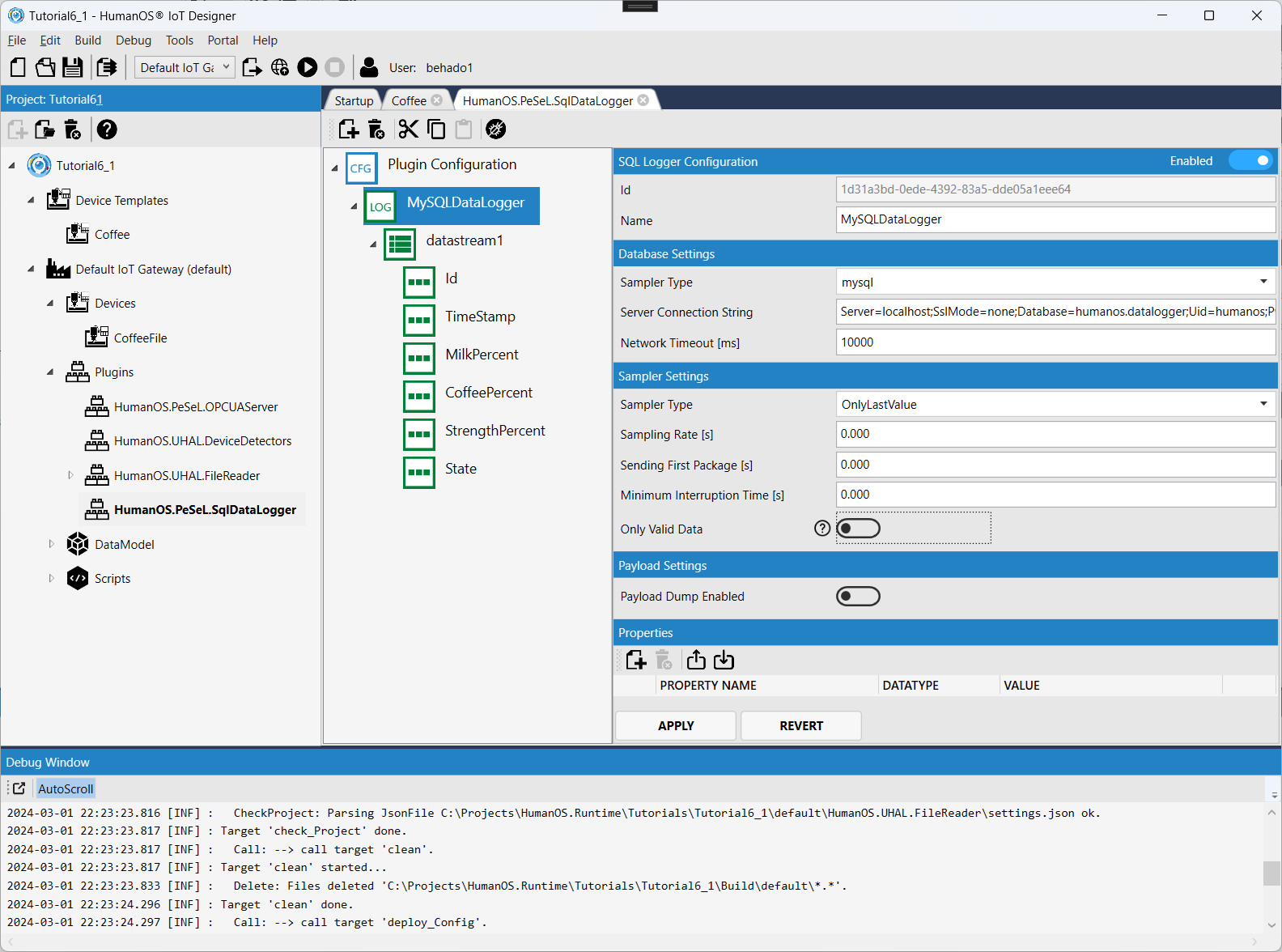

- Configuring a SQL DataLogger

Introduction to SQL DataLogger

Reporting to SQL Database requires an exact definition of the SQL table. The configuration of the mapping between HumanOS data structure (e.g. generic entity) and the SQL table is done within the DataLogger configuration.

Step 1: Create the JSON File

The source of the Coffee Report is a JSON file.

-

Place the JSON file in

C:\Temp\CoffeeProject\coffee.json -

Add following content:

{

"strength": "7/12",

"milkamount": "15ml",

"coffeeamount": "21ml",

"brand": "Nespresso",

"name": "Venezia",

"capsulenetweight": "5,6g"

}

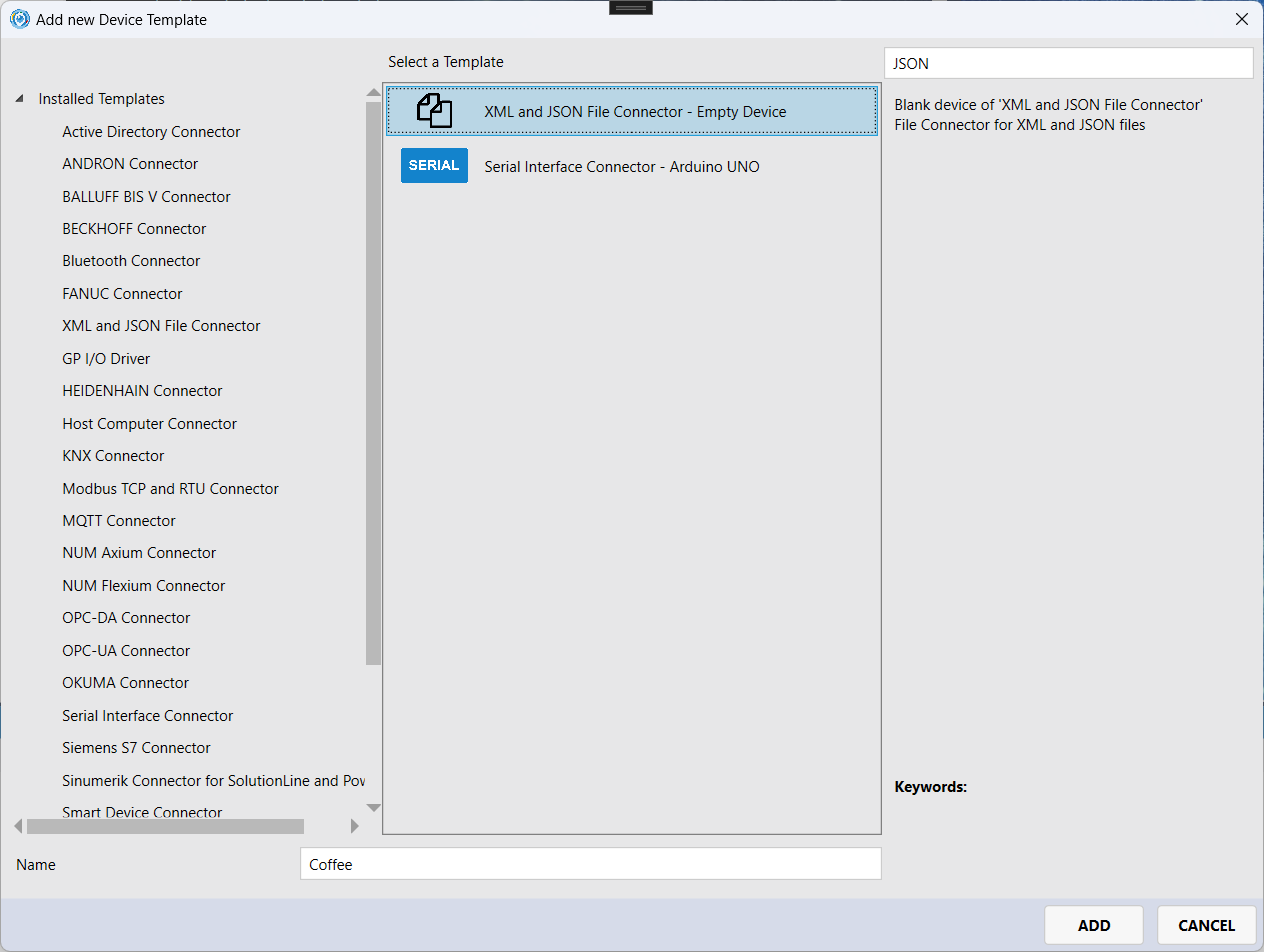

Step 2: Create a Designer Project

-

Create an IoT project with HumanOS IoT Designer. Check Tutorial 1 Example for details about OPC-UA Bridges.

-

Add a new device template for JSON Files called

Coffee.

In this example we use the empty device template.

-

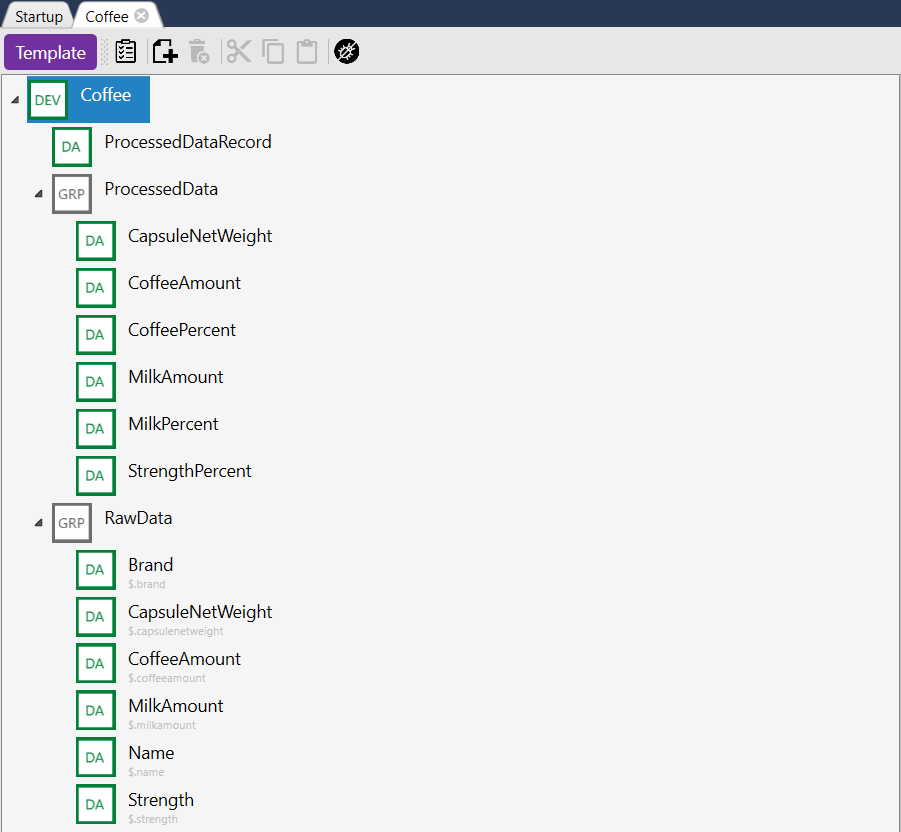

Create a device structure with data nodes

Create the following data nodes and set the PortMatchingIds and data types correctly:

The group RawData contains the data nodes accessing the JSON data.

Name Address PortMatchId Data Type Brand $.brandBrandType System.StringCapsuleNetWeight $.capsulenetweightCapsuleNetWeightType System.StringCoffeeAmount $.coffeeamountCoffeeAmountType System.StringMilkAmount $.milkamountMilkAmountType System.StringName $.nameNameType System.StringStrength $.strengthStrengthType System.StringThe group ProcessedData contains data nodes processed by some processing network.

Name PortMatchId Data Type CapsuleNetWeight CapsuleNetWeightProcType System.DoubleCoffeeAmount CoffeeAmountProcType System.DoubleCoffeePercent CoffeePercentProcType System.DoubleMilkAmount MilkAmountProcType System.DoubleMilkPercent MilkPercentProcType System.DoubleStrengthPercent StrengthPercentProcType System.DoubleNOTEThese data nodes do not have any address, since the data is not coming from a data source.

The data node

ProcessedDatacontains the aggregated data for SQL data logger.Name PortMatchId Data Type ProcessedDataRecord ProcessedDataRecordType System.Double -

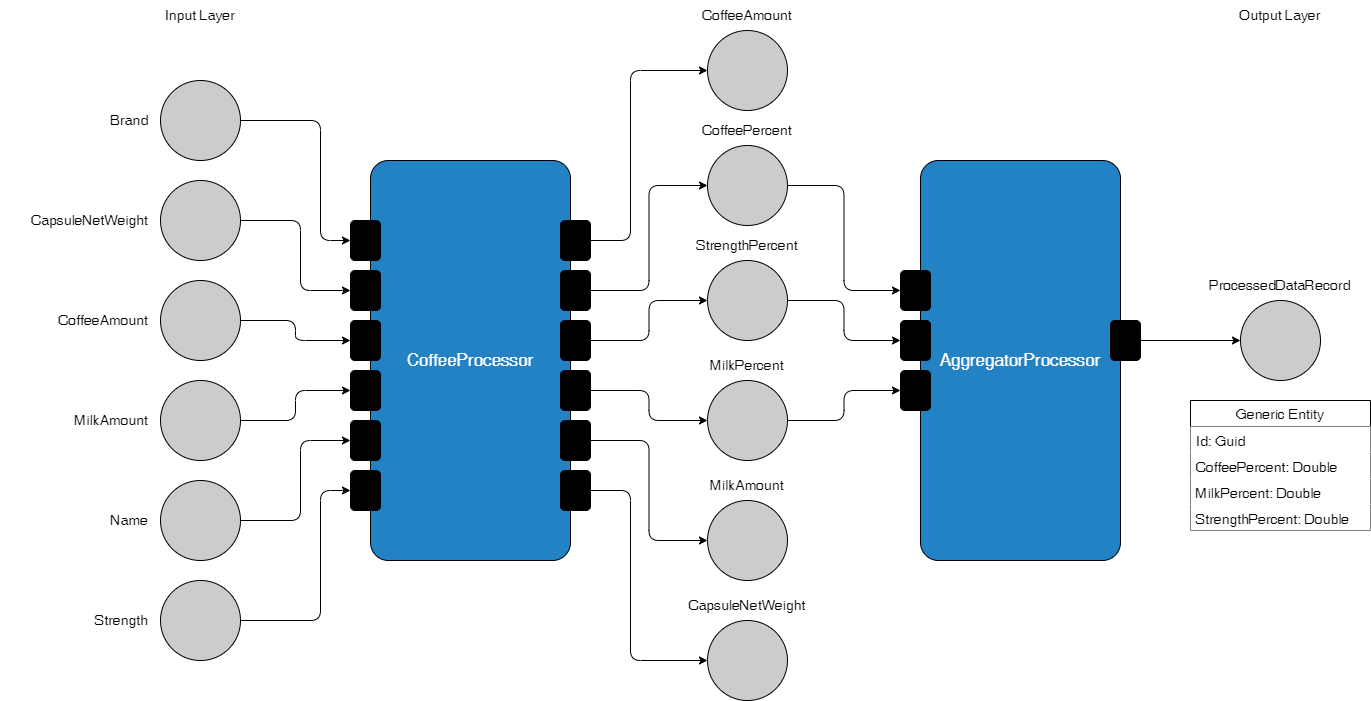

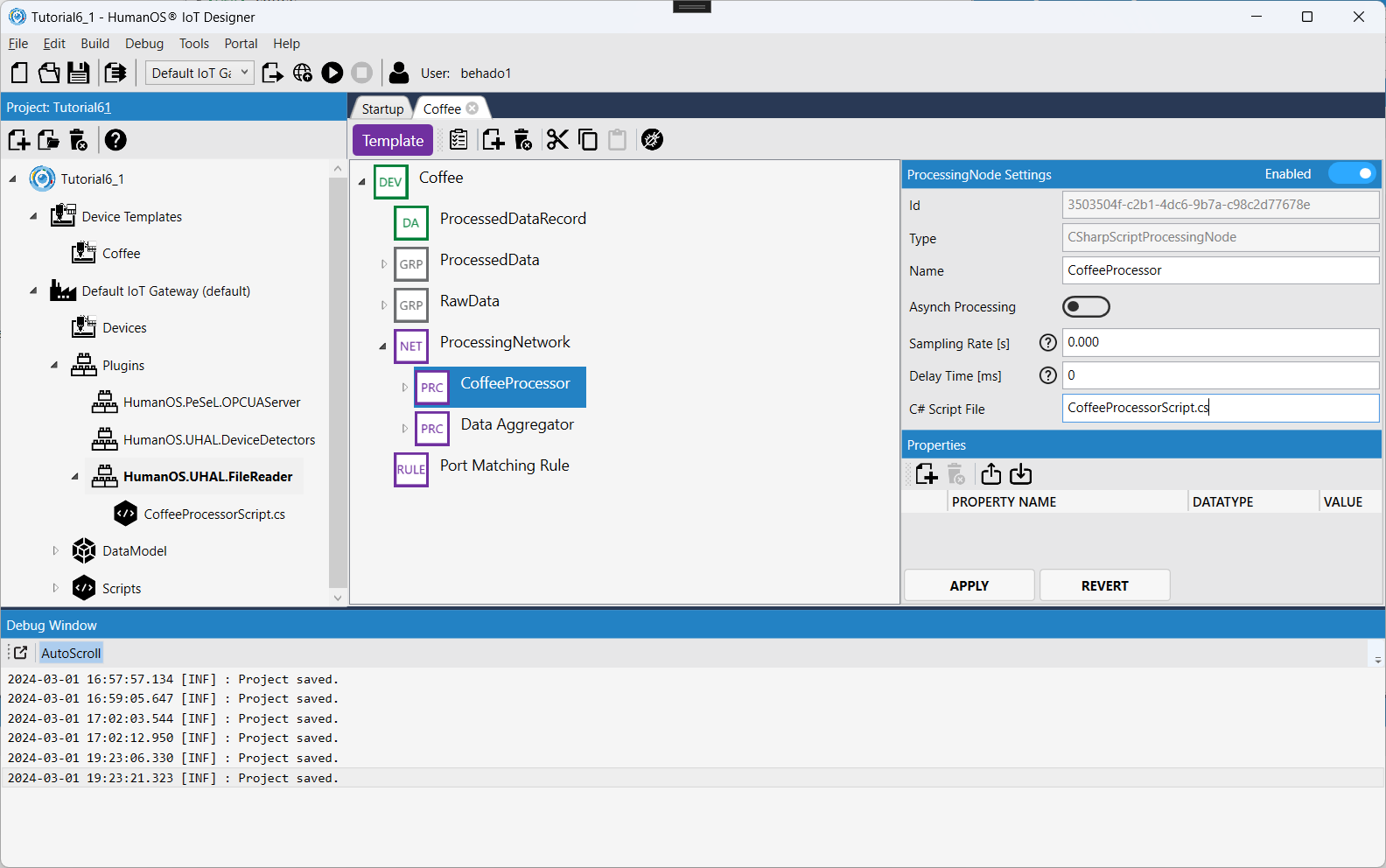

Create a processing network.

- Create

CoffeeProcessoras CSharpScripted Processor - Create

AggregateProcessoras DataAggregator

NOTEMake sure that the port matching is correctly configured.

- Check that data type of data node and port matches

- Check that PortMatchId is correctly set on ports

- Check that the protocol is set to

EventPassing - Add PortMatchingRule to your device.

- Create

-

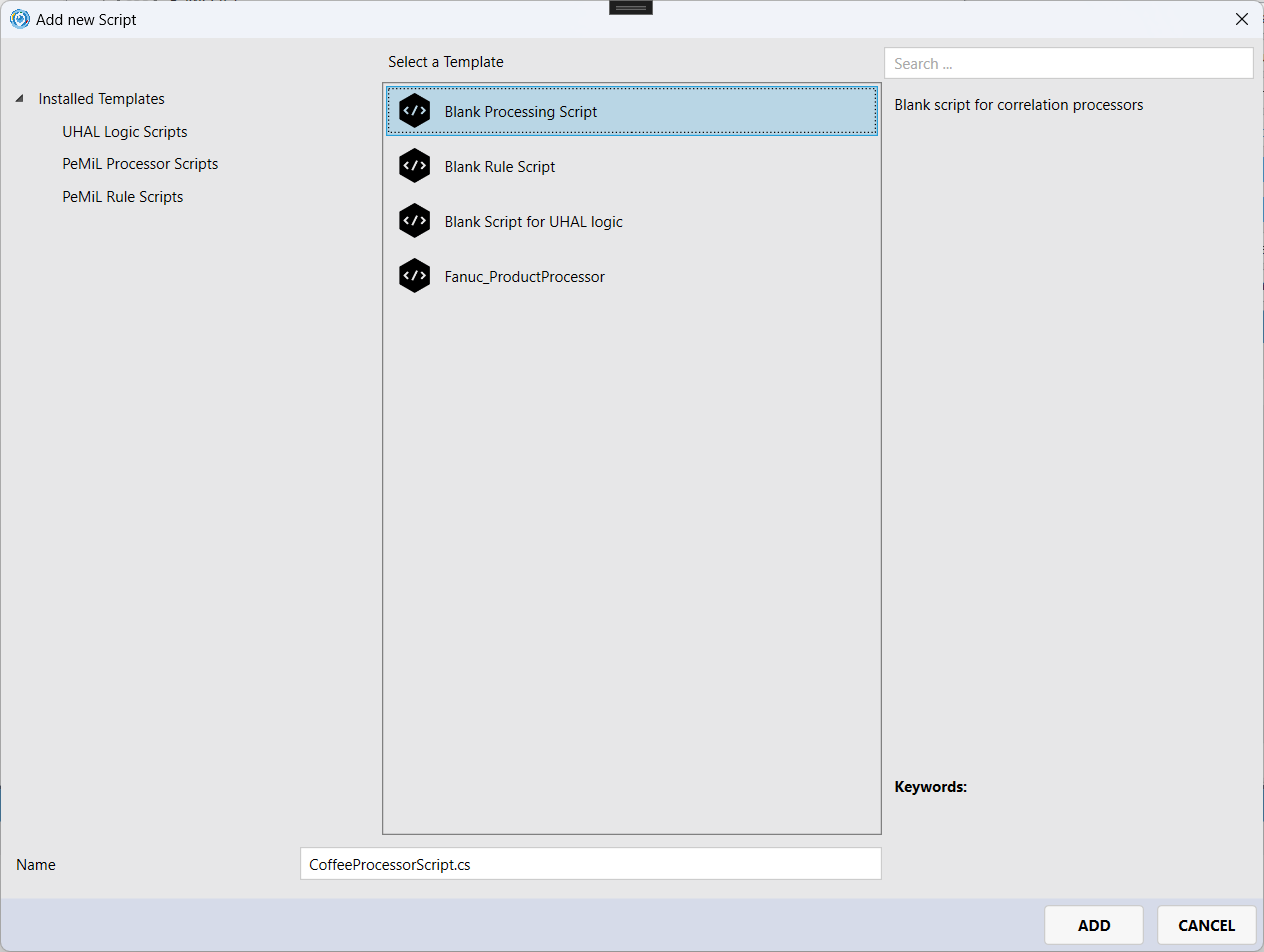

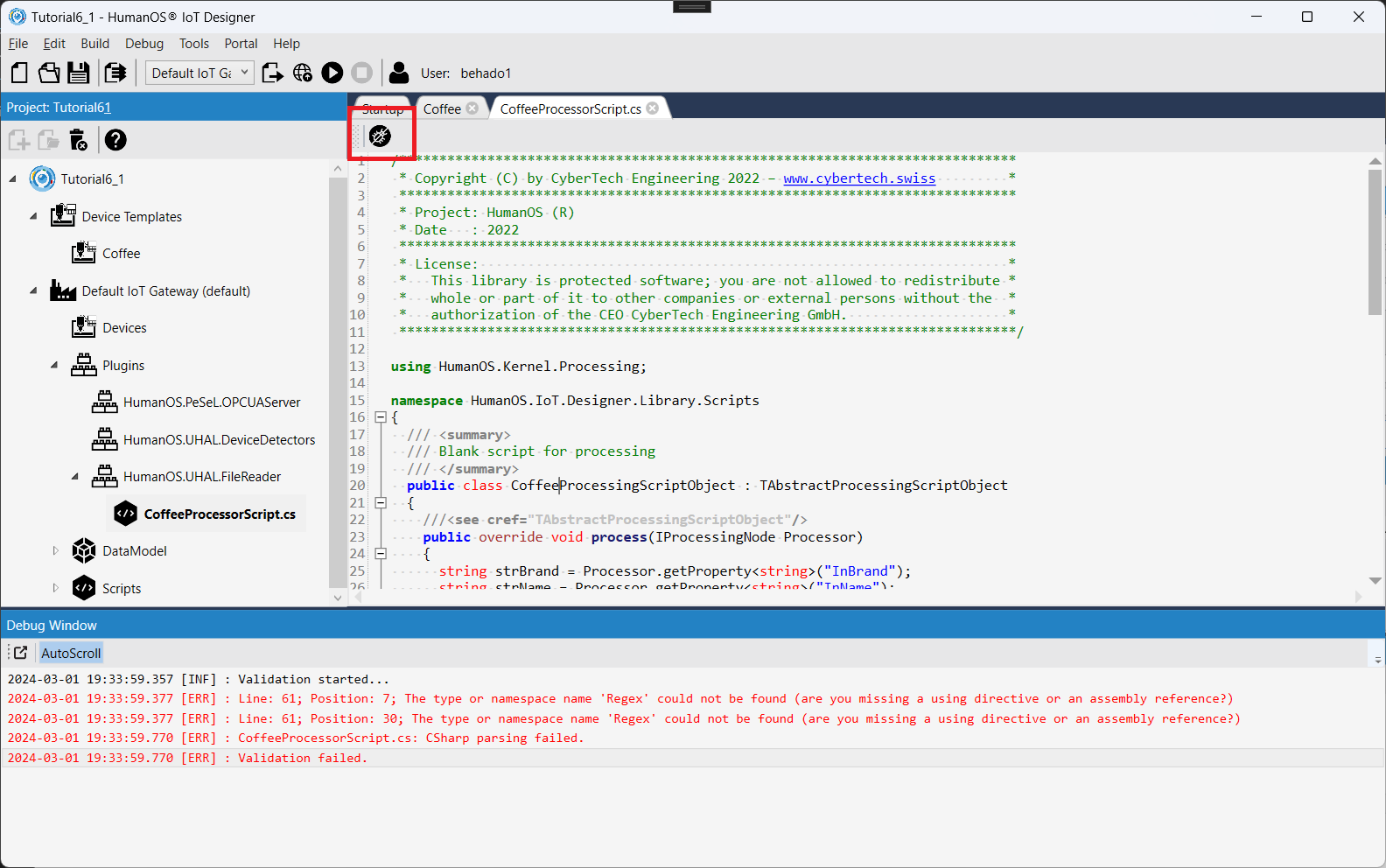

Create a C# Script to transform the input values from JSON file into numerical values of our network.

-

Right click on the plugin

HumanOS.UHAL.FileReaderand selectAdd New Item. -

Create a processing script called

CoffeeProcessorScript.cs.

- Go to the device information file and select the CoffeeProcessor. Set the property

C# Script FiletoCoffeeProcessorScript.cs.

-

-

Write the processor script to convert the data

Following routine can be used to extract the numerical values from strings like

15g:/// gets a double value from a string

private double getDoubleValue(string strValue)

{

double fRetval = double.NaN;

Regex TakeValues = new Regex("^[\\d|\\.|\\,]+");

string strLetterfree = TakeValues.Match(strValue).Value.Replace(",", ".");

if (!string.IsNullOrEmpty(strLetterfree))

{

fRetval = double.Parse(strLetterfree);

}

return fRetval;

}NOTEFor regular expression syntax, check the Online RegExp Tester.

For available namespaces and classes, check the NameSpace and Class List.

-

Validate your Script file before running the gateway:

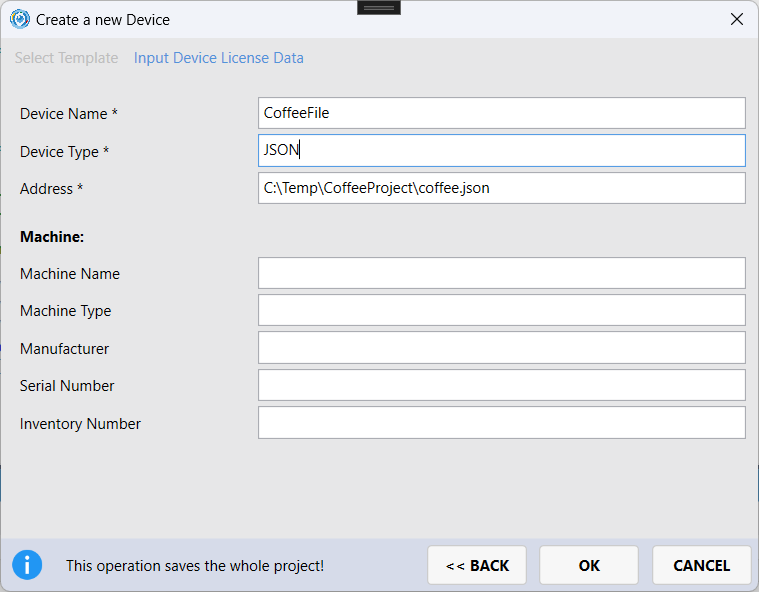

Step 3: Create JSON Device

The coffee device template is now ready. In this step we create a new concrete device out of this template:

-

Create a device within the

default-Target. NOTE

NOTEUse the address

C:\Temp\CoffeeProject\coffee.jsonto connect to the JSON file. -

Build and run HumanOS IoT Gateway

-

Connect with OPC-UA client to the OPC-UA server. Use the connection address

opc.tcp://localhost:4840.

Step 4: Change Content in JSON

Open the JSON file in an editor, e.g. Visual Studio Code.

Try the following changes. What happens on the output?

-

Change the

milkamounttounknown. What happens to the value in OPC-UA?Answer

The processed values change to 'nan' (not a number).

-

Change the JSON to be an invalid document. What changes in OPC-UA?

Answer

The processed and aggregated values change its state to 'BadStateNotActive'.

The raw data nodes change their state to 'BadDataUnavailable'

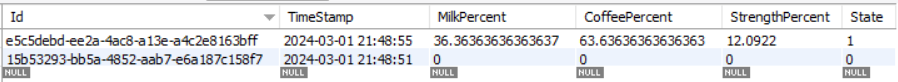

Step 5: Configure SQL DataBase

The next step is to prepare the SQL data base. The goal is to report the aggregated data to an SQL table.

The example works for MySQL, MariaDB, MSSQL and AzureSQL.

The example illustrates the procedure MySQL.

-

Create an new SQL Table in your DataBase, e.g.

humanos.datalogger.CREATE SCHEMA `humanos.datalogger` ; -

Create a table, e.g.

datastream1.- We are using the field

Idof typeSystem.Guidas a primary key in our table. - The field

TimeStampshould represent the timestamp of the data record. - The field

Stateshould represent the data state of the record (Good or BadNotActive).

CREATE TABLE `datastream1` (

`Id` varchar(45) NOT NULL,

`TimeStamp` datetime DEFAULT NULL,

`MilkPercent` double DEFAULT NULL,

`CoffeePercent` double DEFAULT NULL,

`StrengthPercent` double DEFAULT NULL,

`State` int(11) DEFAULT NULL,

PRIMARY KEY (`Id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci; - We are using the field

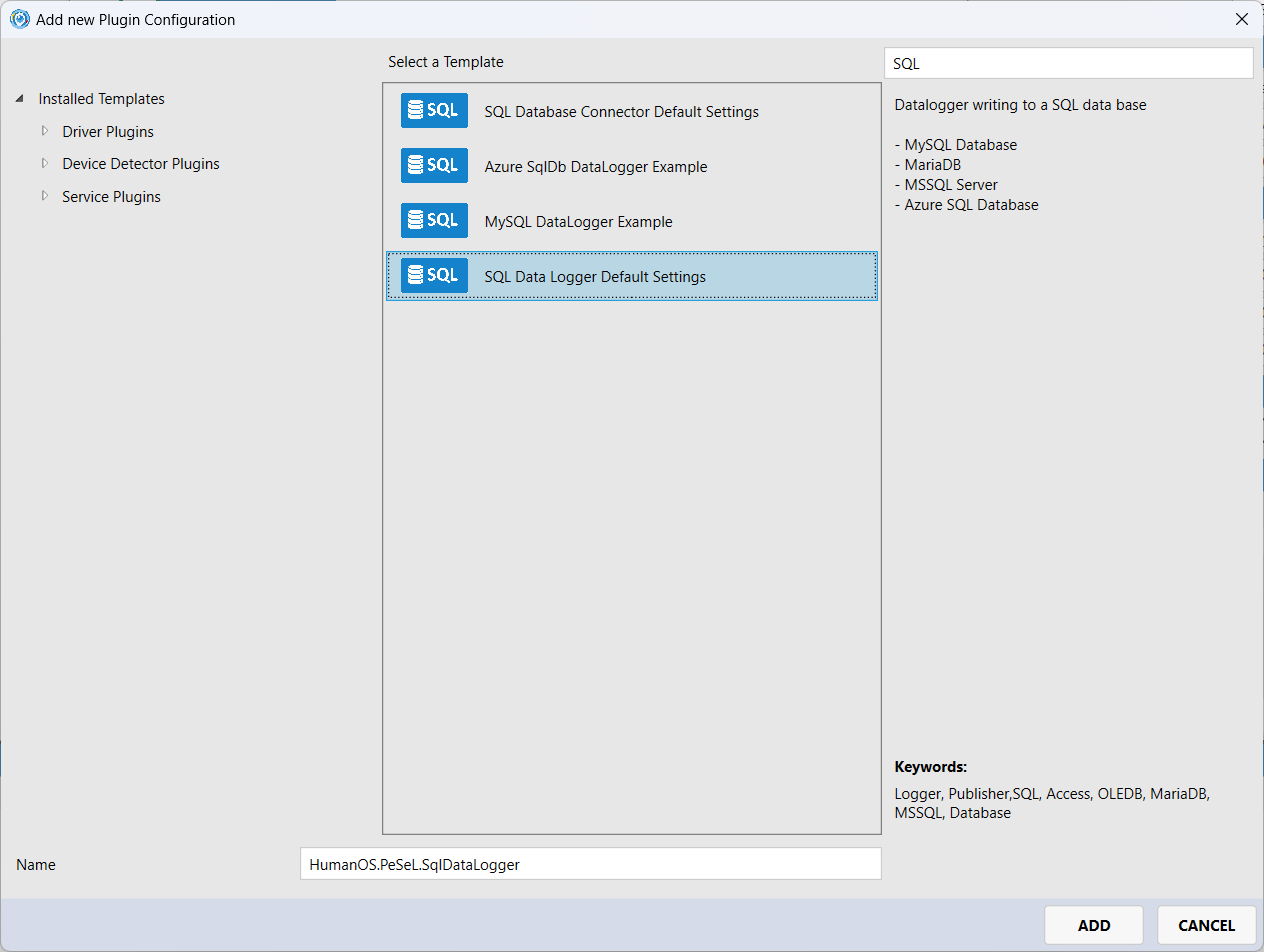

Step 6: Configure SQL DataLogger

-

Right click on

PluginsandAdd new Plugin. -

Select the SQL Data logger plugin

-

Configure the Data Logger

- Select the

SamplerTypetomysql - The server connection string, like

Server=localhost;SslMode=none;Database=humanos.datalogger;Uid=user;Pwd=mypassword; - Sampler type to

OnlyLastValue - Sampling Rate to

0(zero)

- Select the

-

Configure a data set with node filter

node.hasProperty<bool>("EnableSql", true):::node NOTE

The property

EnableSqlmust be set on the data nodeProcessedDataRecord. -

Create the data set fields. Each field represents a column in your SQL table. The field name must match the column name.

- To select the correct entity field, set the Data Query. For instance, to select the

MilkPercentfield, useitem.getValue<double>("MilkPercent").

- To select the correct entity field, set the Data Query. For instance, to select the

-

Build and run HumanOS IoT Gateway

-

Check the table content of your SQL DataBase.

NOTE

NOTENote, that values with state BadNotActive are also reported. To prevent logging such data, select the data logger option

Only Valid Data.

Questions

-

The data within one row of your SQL table contains inconsistent values. Often there are several lines added at once, where as the final line contains the correct data set. What is the cause? And what must be done?

Answer

Since our CoffeeProcessor script processes the data sequently, each time writing the property an event is passed to the data aggregator processor. And each time, the data aggregator is triggered and produces an entity from the current values on its inputs.

The solution is to collect first all data before the data aggregator is triggered. There are two ways:

- Use the

DelayTimeproperty to delay the inputs on the data aggregator. - Use one of the input port as a trigger port, and set the

TriggerTypeof all other ports tonone. Then make sure that sending data to the trigger port is the last instruction of your script file.

- Use the

-

There are "empty" lines in your SQL Table, especially when starting the gateway. How can you get rid of these empty lines?

Answer

These empty line are coming from the fact, that all data is processed by the data logger, even if the data state is

BadNotActive.To prevent logging such data, select the data logger option

Only Valid Data.

-

The data aggregator gets triggered even when some of the data node has no valid data. How can you change the behavior, that only valid data are processed by the data aggregator?

Answer

By default, all changes on any input port triggers the processor.

To prevent that, select the option

Mandatory Port. The processor is triggered only if all mandatory ports have valid data.