Introduction to Data Aggregation

Data aggregation combines a set of data values into one structure, called an entity.

Individual data values often need to be bundled. This is useful when they belong together or when they should be submitted to a target platform as one event.

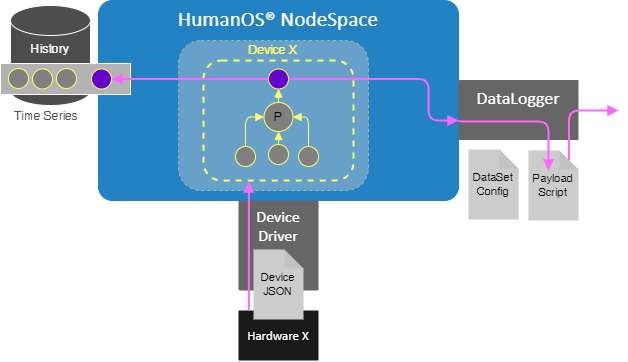

The figure above shows the basic setup of data aggregation:

- Data values are acquired on a device (or in other places).

- A data aggregator processor combines the values into an entity.

- The entity is a new "complex" data value.

- The entity can be stored in a history time series.

- The entity can be passed to data loggers or other services that forward it to external systems.
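The setup above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the product's implementation: the `Entity` and `DataAggregator` classes and the field names `temperature` and `pressure` are hypothetical. The aggregator keeps the latest value of each configured input and, on request, bundles them into one entity with a single timestamp.

```python
import time
from dataclasses import dataclass
from typing import Any, Dict, Iterable


@dataclass
class Entity:
    """Hypothetical entity: one "complex" value bundling several named data values."""
    timestamp: float
    fields: Dict[str, Any]


class DataAggregator:
    """Hypothetical aggregator that keeps the latest value of each configured input."""

    def __init__(self, field_names: Iterable[str]):
        # Every configured field is present from the start, but not yet acquired.
        self._values: Dict[str, Any] = {name: None for name in field_names}

    def update(self, name: str, value: Any) -> None:
        # Record the most recently acquired value for one input.
        if name not in self._values:
            raise KeyError(f"unknown field: {name}")
        self._values[name] = value

    def build_entity(self) -> Entity:
        # Copy the values so later updates do not alter an entity already built.
        return Entity(timestamp=time.time(), fields=dict(self._values))


agg = DataAggregator(["temperature", "pressure"])
agg.update("temperature", 21.5)
agg.update("pressure", 1.013)
entity = agg.build_entity()
print(entity.fields)  # {'temperature': 21.5, 'pressure': 1.013}
```

Copying the values in `build_entity` means each entity is a snapshot, which is what a history time series or a downstream logger would need.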

The Use Case

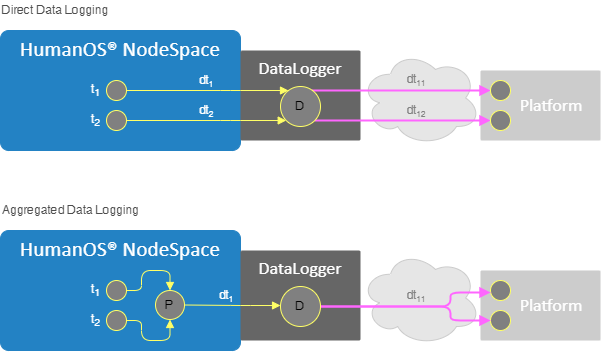

The following problem can occur with direct data logging, that is, without aggregation.

Assume two events are recorded on a data node: e1 at time t1 and e2 later at time t2.

Because the events are processed asynchronously and pass through different systems, such as data logger D, networks, and platform services, e2 may arrive at the target before e1.

Placing the data aggregator early in the processing chain avoids this problem. Both values are coupled into one entity and sent at once, so their order can no longer change in transit.
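The reordering can be reproduced with a small simulation. If e1 is delayed by d1 in transit and e2 by d2, e2 arrives first whenever d1 > (t2 - t1) + d2. The delay values below are made-up numbers standing in for the asynchronous path through data logger D, networks, and platform services; `direct_logging` and `aggregated_logging` are hypothetical names for this sketch only.

```python
from typing import Dict, List, Tuple

# Hypothetical events: (name, record time t in seconds, transport delay in seconds).
EVENTS: List[Tuple[str, float, float]] = [
    ("e1", 1.0, 0.8),  # recorded at t1 = 1.0 s, delayed 0.8 s in transit
    ("e2", 1.2, 0.1),  # recorded later at t2 = 1.2 s, delayed only 0.1 s
]


def direct_logging(events: List[Tuple[str, float, float]]) -> List[str]:
    """Each event travels on its own; return event names in arrival order."""
    arrivals = sorted(events, key=lambda e: e[1] + e[2])  # arrival = t + delay
    return [name for name, _, _ in arrivals]


def aggregated_logging(events: List[Tuple[str, float, float]]) -> List[Dict[str, float]]:
    """Events are coupled into one entity early and sent as a single message."""
    # Fields are inserted in record-time order; dicts preserve insertion order.
    entity = {name: t for name, t, _ in sorted(events, key=lambda e: e[1])}
    # One message has one arrival time, so nothing inside it can be reordered.
    return [entity]


print(direct_logging(EVENTS))      # ['e2', 'e1']
print(aggregated_logging(EVENTS))  # [{'e1': 1.0, 'e2': 1.2}]
```

With these numbers e1 arrives at 1.8 s and e2 at 1.3 s, so direct logging delivers them in the wrong order, while the aggregated path delivers a single message.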

Event Driven Data Aggregation

In event-driven aggregation, the complete entity is collected each time one of its data values changes.
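A minimal sketch of event-driven aggregation, assuming a hypothetical `EventDrivenAggregator` class: every change to any input emits the whole entity, not just the changed field. Treating a repeated, identical value as "no change" is an assumption of this sketch; the actual processor may behave differently.

```python
from typing import Any, Dict, Optional


class EventDrivenAggregator:
    """Hypothetical event-driven aggregator: emits the complete entity on every change."""

    def __init__(self, initial: Dict[str, Any]):
        self._values = dict(initial)

    def on_value(self, name: str, value: Any) -> Optional[Dict[str, Any]]:
        # Assumption: an update that repeats the current value is not a change.
        if name in self._values and self._values[name] == value:
            return None
        self._values[name] = value
        # Emit all fields, not only the one that changed.
        return dict(self._values)


agg = EventDrivenAggregator({"temperature": 20.0, "pressure": 1.0})
print(agg.on_value("temperature", 21.0))  # {'temperature': 21.0, 'pressure': 1.0}
print(agg.on_value("temperature", 21.0))  # None
print(agg.on_value("pressure", 1.1))      # {'temperature': 21.0, 'pressure': 1.1}
```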

Sampled Data Aggregation

In sampled aggregation, the entity is collected every n seconds, even if none of the input data values have changed.
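Sampled aggregation can be sketched with an explicit clock so the behavior is easy to follow. The `SampledAggregator` class and its `tick` method are hypothetical; a real processor would be driven by a timer. Value changes only update the stored state, and `tick(now)` emits one entity per sampling instant that has passed, whether or not anything changed.

```python
from typing import Any, Dict, List, Tuple


class SampledAggregator:
    """Hypothetical sampled aggregator: emits the entity every `period_s` seconds."""

    def __init__(self, initial: Dict[str, Any], period_s: float):
        self._values = dict(initial)
        self._period_s = period_s
        self._next_due = 0.0  # first sample at t = 0

    def on_value(self, name: str, value: Any) -> None:
        # Value changes update the current state but do not trigger output.
        self._values[name] = value

    def tick(self, now: float) -> List[Tuple[float, Dict[str, Any]]]:
        # Emit one entity per elapsed sampling instant, changed or not.
        out = []
        while now >= self._next_due:
            out.append((self._next_due, dict(self._values)))
            self._next_due += self._period_s
        return out


agg = SampledAggregator({"temperature": 20.0}, period_s=5.0)
print(agg.tick(0.0))   # [(0.0, {'temperature': 20.0})]
print(agg.tick(4.0))   # []
agg.on_value("temperature", 21.0)
print(agg.tick(10.0))  # [(5.0, {'temperature': 21.0}), (10.0, {'temperature': 21.0})]
```

Emitting every missed instant (rather than only the latest) is a design choice of this sketch; it keeps the time series evenly spaced if the caller ticks late.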

Compatible Services

The following services accept aggregated data as an entity:

- SQL Data Logger: writes an entity directly to a table, matching each entity field name to the table column with the same name.

- Loggers such as MQTT, AzureIoT, and RmQ: support entities and can transform them into the desired payload using C# scripts.

- OPC-UA Server: passes entities as JSON documents.
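The field-to-column matching described for the SQL Data Logger can be illustrated with Python's built-in `sqlite3` module. This is a sketch under assumptions, not the logger's actual code: the `log_entity` function, the `measurements` table, and the rule that fields without a matching column are skipped are all hypothetical. The last line shows a plain JSON rendering of the same entity; the exact JSON layout the OPC-UA server uses is not specified here.

```python
import json
import sqlite3
from typing import Any, Dict


def quote_ident(name: str) -> str:
    # Quote an SQL identifier so table and column names are not injected raw.
    return '"' + name.replace('"', '""') + '"'


def log_entity(conn: sqlite3.Connection, table: str, entity: Dict[str, Any]) -> None:
    """Hypothetical SQL logging: map entity field names onto same-named columns."""
    # Column 1 of each PRAGMA table_info row is the column name.
    columns = {row[1] for row in conn.execute(f"PRAGMA table_info({quote_ident(table)})")}
    # Assumption: fields without a matching column are skipped.
    matched = [name for name in entity if name in columns]
    if not matched:
        raise ValueError("no entity field matches a table column")
    col_list = ", ".join(quote_ident(name) for name in matched)
    placeholders = ", ".join("?" for _ in matched)
    conn.execute(
        f"INSERT INTO {quote_ident(table)} ({col_list}) VALUES ({placeholders})",
        [entity[name] for name in matched],
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE measurements (temperature REAL, pressure REAL)")
entity = {"temperature": 21.5, "pressure": 1.013}
log_entity(conn, "measurements", entity)
print(conn.execute("SELECT temperature, pressure FROM measurements").fetchall())
# [(21.5, 1.013)]

# A plain JSON rendering of the same entity.
print(json.dumps(entity))  # {"temperature": 21.5, "pressure": 1.013}
```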